Snakes are scary. Venomous snakes are scarier. Perhaps that’s why, years ago, the British governor of colonial Delhi wanted to rid the city of cobras. In an attempt to accomplish this, he introduced a bounty: turn in a cobra skin, get some cash.

The governor was chagrined to discover that enterprising Indians had begun farming cobras to collect the bounty. Realizing that the program had backfired, the British canceled it, leaving the farmers with a lot of snakes and no market for them. The farmers released the snakes, increasing Delhi's population of venomous cobras. The attempted solution made the problem worse, a class of unintended consequences now known as the cobra effect.

Unfortunately, venom isn’t confined to snakes. Neither are unintended consequences.

Venom, vitriol, misinformation, and other types of bunk flourish online. Facebook has been at the center of a number of controversies in this area, including foreign interference in the 2016 US presidential election. Links to fake news sites were one of the problems in 2016, notes Alex Stamos, former Chief Security Officer at Facebook and current director of the Stanford Internet Observatory, in this podcast with Kara Swisher.

In the aftermath of the election brouhaha, Facebook adjusted its algorithm to serve more user-generated content and fewer links to outside sources. Posts that spurred conversation were given higher visibility in News Feed. Facebook Groups, a rich source of user-generated content, benefited from the change.

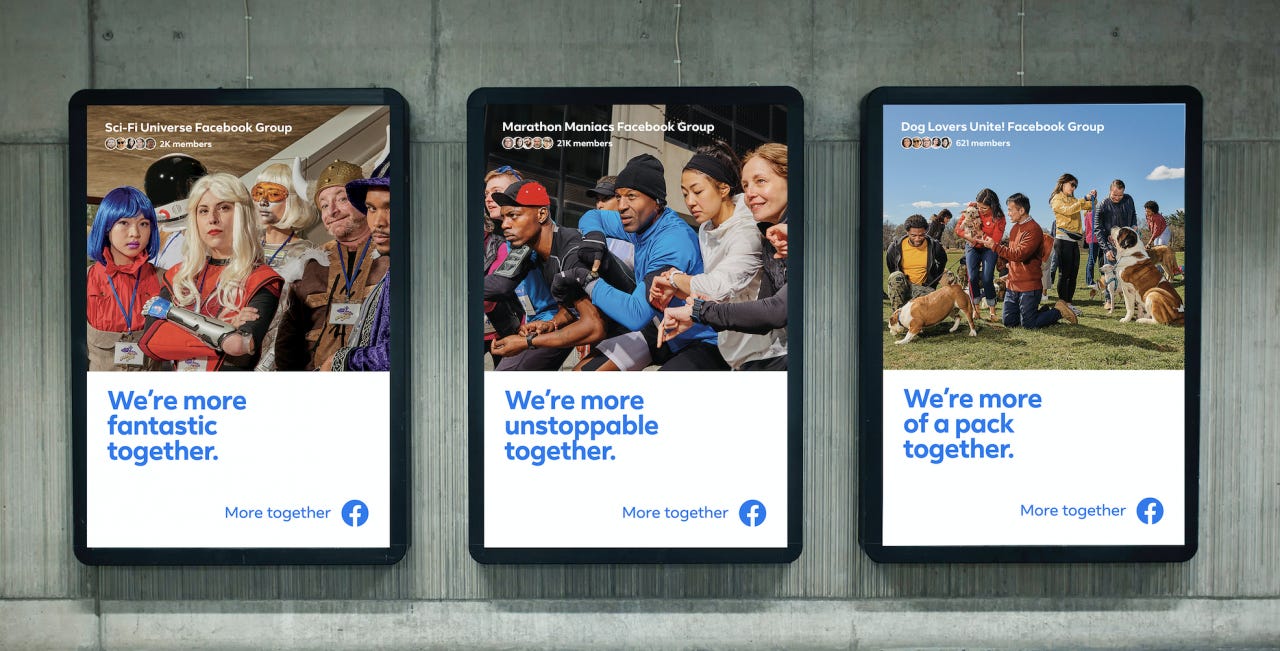

Over 2.7 billion people use Facebook properties every month, so Facebook Groups span the spectrum of human interest from the heartwarming to the despicable. Examples include dog lovers, marathon runners, and sci-fi enthusiasts. Also, QAnon.

Source: Facebook “More Together” ad campaign.

Infinite scroll makes News Feed an insatiable beast requiring constant feeding. To grease the wheels of engagement and content creation, Facebook algorithmically suggests people to friend and groups to join. Most social media companies make recommendations like this. Here’s where the law of unintended consequences strikes again. Returning to the Alex Stamos interview:

> I think, first off, the core product problem here was group recommendations...And I think one of the things that isn’t well understood on the outside is how much these things are [delved] into and how much data is created on each of these issues before a decision is made. And one of the little things that leaked out was, there was apparently a report that said that of people who joined extremist groups, something like 60% of the people who joined that joined it because of the recommendation algorithm.

Oops.

Seeking an antidote to one type of digital venom, Facebook inadvertently encouraged another.

As the world grows more connected and more complex, the potential for unintended consequences increases. Things become more worrisome when internet scale is added into the mix. The cobras were a problem for one city; unintended consequences on Facebook can affect hundreds of millions, or even billions, of people.
